A Message Regarding Changes in Medicine and Test Questions:

ABIM is aware that, on occasion, for a small number of questions, changes in medicine (e.g., new practice guidelines) occur late in the examination publishing process and may alter what was previously the correct answer.

Do your best to answer all questions according to your understanding of current clinical principles and practice.

If ABIM determines that new information has changed what was designed to be the correct answer, so that there is no longer a single best response, that question will not be counted in the overall score.

Item Development & Review Structure

Three groups of subject matter experts are involved in developing and approving exam content.

- Item-Writing Task Force members – Develop all content for ABIM assessments

- Mentors – Advise new item writers throughout the item development process

- Approval Committee members – Review and approve all items before they are used on an exam

All item writers, mentors, and Approval Committee members must hold valid Board certification in internal medicine or an internal medicine subspecialty. ABIM strives to include broad representation from the relevant discipline to maintain the validity of assessments. Membership reflects diversity in race, age, gender, geographic location, and institutional setting.

Clinical expertise in the certification discipline is an essential requirement. All members are involved in direct patient care; full-time practitioner representation outside academic institutions is important to ensure job-relevant understanding of practice issues across the discipline.

Assessment Blueprint

A blueprint is a table of specifications that determines the content of each assessment. It is developed by the Approval Committee for each discipline and reviewed annually. The discipline's Specialty Board must also approve the exam blueprint. The blueprint is based on analyses of current practices and understanding of the relative importance of the clinical problems in the specialty area. Trainees, training program directors, and certified practitioners in the discipline are surveyed periodically to provide feedback and inform the blueprinting process. The blueprint also includes the target percentage of questions from each primary medical content category on the assessment. This ensures appropriate and consistent representation of the content categories from one assessment administration to the next. Changes to the composition of the blueprint usually follow trends in current practice. Select a blueprint for your specialty.

Question Format

Exams feature multiple-choice questions (MCQs) with a single best answer. Research has shown that scores obtained with MCQs are correlated with superior clinical performance; moreover, MCQs are particularly suitable for simulating clinical decision-making. The overwhelming majority of ABIM exam questions use a clinical stem (patient-based case scenario) format that assesses the higher-order cognitive abilities required for clinical decision-making. A small number of questions address specific knowledge points without the use of a clinical stem. ABIM examination questions include both Système International (SI) and imperial units for height (cm/in), weight (kg/lb), and temperature (°C/°F). View examples in Exam Tutorials.

Question Development

Basing question development on its associated blueprint helps guarantee that questions, and the overall assessment, are relevant and appropriate for the discipline. A gap analysis is performed to identify where additional questions are needed based on the blueprint. As a part of this review, underrepresented areas are identified and practice changes are considered. Question writing assignments then are given to members of the Item-Writing Task Force.
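The gap analysis described above can be illustrated with a minimal sketch. It assumes the blueprint is reduced to target percentages per content category and that the current pool is summarized as a count per category. The category names, the counts, the pool-size goal, and the function `blueprint_gaps` are all hypothetical and are not taken from any ABIM blueprint or tool.

```python
def blueprint_gaps(target_pct, pool_counts, pool_size_goal):
    """Return the number of additional questions each category needs.

    target_pct     -- hypothetical blueprint targets, in percent per category
    pool_counts    -- number of usable questions currently in each category
    pool_size_goal -- assumed total number of questions the pool should hold
    """
    if sum(target_pct.values()) != 100:
        raise ValueError("blueprint percentages must sum to 100")
    gaps = {}
    for category, pct in target_pct.items():
        needed = round(pct * pool_size_goal / 100)
        have = pool_counts.get(category, 0)
        # A category that already meets its target needs no new questions.
        gaps[category] = max(0, needed - have)
    return gaps


# Invented example data (not real blueprint values).
targets = {"Category A": 40, "Category B": 35, "Category C": 25}
pool = {"Category A": 90, "Category B": 50, "Category C": 45}

gaps = blueprint_gaps(targets, pool, pool_size_goal=200)
for category, shortfall in gaps.items():
    print(f"{category}: write {shortfall} more question(s)")
```

In this sketch, categories with the largest shortfall would be the natural candidates for new writing assignments. Practice changes, which the review also considers, are not modeled here.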

For each question, the writer first develops a “testing point” based on the blueprint and maps the question to a specific content area and task in the blueprint. The testing points address areas that the qualified examinee is expected to know without consulting medical resources or references. The level of difficulty for each testing point should reflect the measurement goal of the examination: to differentiate examinees who have the expertise required for certification from those who do not.

Question Review/Editing Process

Following initial development, questions are processed by ABIM, which includes reviewing for compliance with item-writing best practices, copyediting, and processing of any illustrations. Item-Writing Task Force members then have an opportunity to review and provide feedback on the edited questions.

All questions are also rigorously reviewed by the Approval Committee. The review process seeks to ensure that each question is fair, relevant, can be read and answered within a reasonable time by the examinee, and contains only one correct answer. Only questions that are approved by the Committee are eligible to move on to pretesting.

Approved questions are then proofread by editorial staff and prepared for production. Questions in the live item pool (i.e., used on previous assessments) are also evaluated for content and statistical performance for possible future use.

Pretesting

Pretesting is a standard practice in assessment development that allows testing of new questions without risk to the examinee. These questions are not counted in the examinee's score and are not identifiable to the examinee. Each pretest question is assessed according to statistical performance criteria before being accepted into the live pool of scorable questions.

Test Assembly and Administration

ABIM uses an Automated Test Assembly (ATA) program to build its assessments. ATA ensures a fair balance of content on each form of an assessment, reflecting the distribution of items specified in the blueprint as well as other specific criteria. ATA also applies statistical criteria to ensure that forms are comparably constructed with regard to difficulty, discrimination, relevance, and other statistical constraints.

Most Certification examinations are administered annually. The traditional, 10-year Maintenance of Certification (MOC) examinations are administered once or twice a year, depending on the number of physicians in a given specialty. The Longitudinal Knowledge Assessment (LKA™) offers a self-paced option for physicians to acquire and demonstrate ongoing knowledge. The LKA includes a 5-year cycle in which physicians answer questions on an ongoing basis and receive feedback on how they are performing along the way.

Standard Setting

When assessments are used to make classification decisions such as pass/fail, content experts must determine how many questions need to be answered correctly to pass. This score is known as the “passing score.” The process of determining the passing score is known as standard setting. Standard setting procedures provide a systematic and thorough method for eliciting a passing score from a diverse set of content experts.

How does ABIM Determine the Passing Score for an Exam?

ABIM uses the standard setting procedure first described by William Angoff in 1971. The Angoff method is well supported in the literature and is the most popular item-centered standard setting procedure in use on credentialing exams today. In the Angoff method, panels of content experts:

- Discuss and internalize the ability of the borderline examinee.

- Review test questions and evaluate the difficulty of each.

- Estimate the proportion of borderline examinees who will answer each question correctly.

The logic behind this method is that the sum of these proportions is the expected score for the borderline examinee, that is, the passing score for the exam.

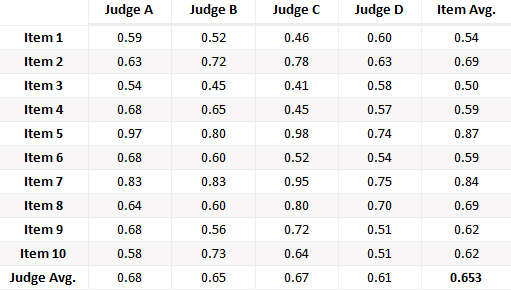

In the fictitious example above, four content experts (A-D) provide ratings for 10 items. The average of these 40 ratings is the percent of items needed to pass the assessment, in this case, 65.3%. In an actual standard setting activity at ABIM, dozens of content experts review and rate hundreds of test questions.
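The arithmetic behind the Angoff method can be sketched in a few lines. The ratings below are invented for illustration and are not the values from the figure; the function name `angoff_passing_score` is likewise hypothetical. Each rating is one expert's estimate of the proportion of borderline examinees who would answer an item correctly.

```python
from statistics import mean


def angoff_passing_score(ratings):
    """Compute an Angoff passing score from a panel's ratings.

    ratings -- list of rows, one per expert; each row holds one rating
               (a proportion between 0 and 1) per item.
    Returns (expected number correct, proportion of items correct).
    """
    n_items = len(ratings[0])
    if any(len(row) != n_items for row in ratings):
        raise ValueError("every expert must rate every item")
    # Average the experts' ratings for each item (one column per item).
    item_means = [mean(column) for column in zip(*ratings)]
    # The sum of the item averages is the borderline examinee's expected score.
    expected_correct = sum(item_means)
    return expected_correct, expected_correct / n_items


# Hypothetical panel: 3 experts rating 4 items.
ratings = [
    [0.60, 0.70, 0.50, 0.80],
    [0.70, 0.60, 0.40, 0.90],
    [0.50, 0.80, 0.60, 0.70],
]

raw, proportion = angoff_passing_score(ratings)
print(f"Expected score for the borderline examinee: {raw:.1f} of {len(ratings[0])} items")
print(f"Passing score as a percent of items: {proportion:.1%}")
```

Because every expert rates every item, the proportion returned here equals the plain average of all ratings, which is how the example above arrives at its percentage.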

Who are the Content Experts?

ABIM invites a representative group of content experts, selected from the diplomate population, to participate in the standard setting meetings.

What is the Difference Between the Passing Score and the Pass Rates?

As noted above, the goal of standard setting is to determine a passing score. The passing score is the number of items an examinee has to answer correctly to pass the exam. Once the passing score is determined, it is held constant for a period of time so that all examinees are held to the same standard. However, in accordance with best practice in assessment, ABIM periodically consults with practicing physicians in your discipline to review and update the passing score using the process described above. This allows ABIM to ensure that the passing scores reflect appropriate and current expectations for examinee performance in the discipline.

The pass rate is the percentage of examinees who pass the exam in a given administration. The pass rate can change over time due to differences in examinee performance.

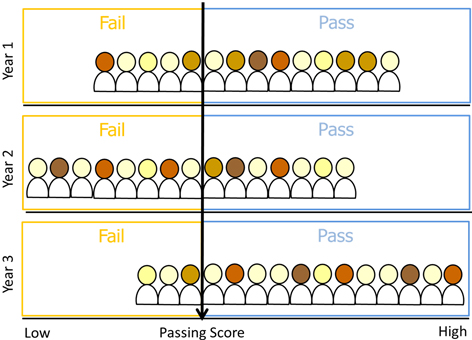

The example above shows three years of testing. The passing score, represented by the black line, is constant across all three years. The pass rates, however, change with each cohort of examinees.

- In year 1 the cohort is medium performing and the pass rate is about 65%.

- In year 2 the cohort is low performing and the pass rate is about 45%.

- In year 3 the cohort is high performing and the pass rate is about 80%.

It is important to note that ABIM does NOT set pass rates; ABIM sets the passing score. Once the passing score is established, the pass rates can vary depending on the performance of the examinees in a given cohort. Get more information on pass rates.
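The distinction can be made concrete with a small sketch in which the passing score is fixed and only the cohorts change. The scores, the passing score of 65, and the function `pass_rate` are invented for illustration. The sketch also assumes that an examinee passes when the score is greater than or equal to the passing score.

```python
def pass_rate(scores, passing_score):
    """Return the fraction of examinees whose score meets the passing score."""
    if not scores:
        raise ValueError("cohort has no scores")
    passed = sum(1 for score in scores if score >= passing_score)
    return passed / len(scores)


# Hypothetical fixed standard: number of items that must be answered correctly.
PASSING_SCORE = 65

# Hypothetical cohorts of ten examinees each (invented scores).
cohorts = {
    "Year 1": [58, 62, 66, 70, 71, 64, 68, 75, 60, 67],
    "Year 2": [50, 55, 66, 61, 70, 59, 63, 68, 57, 72],
    "Year 3": [70, 72, 64, 80, 77, 69, 66, 85, 62, 74],
}

rates = {year: pass_rate(scores, PASSING_SCORE) for year, scores in cohorts.items()}
for year, rate in rates.items():
    print(f"{year}: passing score {PASSING_SCORE}, pass rate {rate:.0%}")
```

The standard applied to each cohort is identical; the pass rate differs only because the cohorts perform differently.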

Additional Resources:

Angoff WH. Scales, norms, and equivalent scores. In R.L. Thorndike (Ed.), Educational measurement (2nd ed.; pp. 508-600). Washington, DC: American Council on Education. 1971.

Cizek GJ. Setting performance standards: Concepts, methods, and perspectives. Mahwah, NJ: Lawrence Erlbaum Associates. 2001.

Livingston SA, Zieky MJ. Passing scores: A manual for setting standards of performance on educational and occupational tests. Educational Testing Service. 1982.

Mills CN. Establishing passing standards. In J.C. Impara (Ed.), Licensure testing: Purposes, procedures, and practices. University of Nebraska-Lincoln: Buros Institute of Mental Measurements. 1995.

Berk RA. A consumer's guide to setting performance standards on criterion-referenced tests. Review of Educational Research. 1986;56:137-172.

Answer Key Validation

Recognizing that advances in medicine can change the accuracy of a test question and its keyed answer, ABIM conducts a rigorous key validation process after the assessment is administered and before final scores are released. The purpose of the key validation process is to ensure that each test question is accurate and that medical knowledge in the area has not changed since the question was last reviewed by the Approval Committee. Test questions are flagged after thorough examination of item statistics and/or comments made by examinees that suggest new information has emerged that may affect the correct answer. Test questions flagged as potentially needing key validation are sent to the Approval Committee Chair for review. If any flagged question is determined to be inaccurate based on its content, ABIM removes it from scoring and from the live question pool.